#1 Answer all parts of this question:

Question 1 (a): Explain the Chow test of parameter stability.

The Chow test helps us test for structural changes or ‘breaks’ by testing whether the coefficients of two linear regressions are the same over different sets of data (for example, over two time periods).

| Quote: |

| The consequence of including dummy variables in regression is essentially that we estimate two or more regressions simultaneously. |

| Quote: |

Three separate regressions are run for:

the entire data set-------------------------n------RSS(N)

period before any parameter change----n1----RSS(n1)

period after at least one parameter changed-n2---RSS(n2)

Each regression estimates k parameters.

The Chow test compares the residual sum of squares from a regression run on the entire data set with the residual sums of squares from two separate regressions on two subgroups within the sample. That is, the test compares RSS(N) with RSS(n1)+RSS(n2). If the two values are close, the same parameters are appropriate for the entire data set: the parameters are stable. The point of the F-test is to see whether the residual sum of squares (which measures the variation in the data not explained by the regression) is significantly reduced by fitting two separate regressions rather than just one.

RSS(UR) = RSS(1) + RSS(2)

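F = ((RSS(R) - RSS(UR))/k) / (RSS(UR)/(n1+n2-2k))

Or, in terms of the three regressions listed earlier:

F = ((RSS(N) - (RSS(n1) + RSS(n2)))/k) / ((RSS(n1) + RSS(n2))/(n1+n2-2k))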

If the calculated value of the test statistic is greater than the critical value at a predetermined significance level, say .05, reject H(0); the same parameters are not appropriate for the entire sample period.

|

| Quote: |

{Computed F< Critical F}

6. Therefore, we do not reject the null hypothesis of parameter stability (i.e. no structural change) if the computed F value in an application does not exceed the critical F value obtained from the F table at the chosen level of significance (or the p value). |

But it must first be determined whether the error variances in the regressions for n1 and n2 are the same.

Estimated error variances: Var(n1) = RSS(1)/(n1-k) and Var(n2) = RSS(2)/(n2-k)

F = Var(n1)/Var(n2)

which follows the F distribution F(n1-k, n2-k)

| Quote: |

Note: By convention we put the larger of the two estimated variances in the numerator.

Computing this F in an application and comparing it with the critical F value with the appropriate df, one can decide to reject or not reject the null hypothesis that the variances in the two subpopulations are the same. If the null hypothesis is not rejected then one can use the Chow test. |

+++++++++++++++++++

(b) Using annual data, a consumption function has been estimated for an economy over two consecutive periods. The estimated equations and associated sums of squared residuals (RSS) are:

C(t) = 3.5 + .67*Y(t) + u(t)

-------(1.9)---(.18)----------Standard Errors

1960-1979 RSS(1) = 53.6

C(t) = 7.3 + .89*Y(t) + u(t)

------(2.21)---(.23)

1980-1999 RSS(2) = 198.6

in which C is consumption expenditure and Y is personal disposable income. When the equation is estimated for the combined data set, RSS = 641.2. Using the Chow test at the .05 significance level, test the hypothesis that the parameters are stable over time.

I use the following equations from the book:

RSS(UR) = RSS(1) + RSS(2)

F = ((RSS(R)-RSS(UR))/k) / (RSS(UR)/(n(1)+n(2)-2*k))

k= 2 {parameters in equation}

n(1)=n(2)=20

RSS(UR)= 252.2

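F (calculated/computed) = ((641.2 - 252.2)/2) / (252.2/(40-4)) = 194.5/7.006 = 27.76

F(2,36) at the 5% level is approximately 3.26 (for comparison, F(2,40) is 3.23 at 5% and 5.18 at 1%).

Since 27.76 far exceeds the critical value, we reject the null hypothesis of parameter stability.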

| Quote: |

6. {Page 277}

Therefore, we do not reject the null hypothesis of parameter stability (i.e. no structural change) if the computed F value in an application does not exceed the critical F value obtained from the F table at the chosen level of significance (or the p value). |

Computed F < Critical F then do not reject the null hypothesis of parameter stability.

Computed F > Critical F then we reject the null hypothesis of parameter stability.
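As a cross-check on the arithmetic in part (b), here is a minimal Python sketch of the Chow F statistic together with the equal-variance ratio described in part (a). The function names are hypothetical, and the critical value is typed in by hand as an assumed table value for F(2, 36) at the 5% level, so it should be verified against F tables.

```python
def chow_f(rss_pooled, rss_1, rss_2, n1, n2, k):
    """Chow F statistic with its numerator and denominator degrees of freedom."""
    rss_ur = rss_1 + rss_2                      # unrestricted RSS
    df_num, df_den = k, n1 + n2 - 2 * k
    f_stat = ((rss_pooled - rss_ur) / df_num) / (rss_ur / df_den)
    return f_stat, df_num, df_den


def variance_ratio_f(rss_1, rss_2, n1, n2, k):
    """Equal-variance check: the larger estimated variance goes in the numerator."""
    var_1, var_2 = rss_1 / (n1 - k), rss_2 / (n2 - k)
    return max(var_1, var_2) / min(var_1, var_2)


f_stat, df_num, df_den = chow_f(641.2, 53.6, 198.6, n1=20, n2=20, k=2)
ratio = variance_ratio_f(53.6, 198.6, n1=20, n2=20, k=2)

CRITICAL_F_5PCT = 3.26  # assumed table value for F(2, 36); verify against F tables

print(f"Chow F({df_num},{df_den}) = {f_stat:.2f}")
print("reject stability" if f_stat > CRITICAL_F_5PCT else "do not reject stability")
print(f"variance ratio F({20 - 2},{20 - 2}) = {ratio:.2f}")
```

The variance ratio is printed without a verdict because its critical value, F(18, 18), is not given in these notes; it needs to be looked up before relying on the Chow result.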

+++++++++++++++++

(c) How might dummy variables be used to test the hypothesis that both the intercept and slope coefficients have changed?

By running one regression over both time periods with a dummy variable, we can determine whether the intercept, the slope, or both have changed, as in the following simple example:

Y(t) = a(1) + a(2)*D(t) + b(1)*X(t) +b(2)*(D(t)*X(t)) + u(t)

Where D is 0 for one period and 1 for the other period.

Thus if a(2) {differential intercept} is significant we know that the intercept has changed, and if b(2) {differential slope coefficient or slope drifter} is significant we know that the slope has changed between the two periods. To test both together, a joint F-test of a(2) = b(2) = 0 can be used.

More precisely:

| Quote: |

| Thus if the differential intercept coefficient a(2) is statistically insignificant, we may accept the hypothesis that the two regressions have the same intercept. |
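To make the link with part (b) concrete: in a regression where the dummy shifts both the intercept and the slope, the OLS coefficient estimates reproduce the two separate regressions, so the differential coefficients are simply the differences between the period estimates. The sketch below uses the part (b) estimates; the variable and function names are hypothetical. Whether a(2) and b(2) are significant cannot be judged from these numbers alone, since that needs the standard errors from the pooled dummy regression.

```python
# Separate-period estimates from part (b): (intercept, slope)
period_0 = (3.5, 0.67)   # 1960-1979, D = 0
period_1 = (7.3, 0.89)   # 1980-1999, D = 1

a1, b1 = period_0                  # base-period intercept and slope
a2 = period_1[0] - period_0[0]     # differential intercept
b2 = period_1[1] - period_0[1]     # differential slope (slope drifter)


def fitted(d, x):
    """Fitted value from Y = a1 + a2*D + b1*X + b2*(D*X)."""
    return a1 + a2 * d + b1 * x + b2 * d * x


print(f"a(2) = {a2:.2f}, b(2) = {b2:.2f}")
print(f"D=0, X=10: {fitted(0, 10):.2f}   D=1, X=10: {fitted(1, 10):.2f}")
```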

#2. (a) Explain the partial adjustment hypothesis.| Quote: |

1.1.3 This partial adjustment model is an autoregressive model which is similar to the autoregressive model formed by using the Koyck transformation (in section 1.1.2). Notice, however, that with this partial adjustment model, the disturbance term is s*u(t) and s is a constant; s*u(t) is not autocorrelated if u(t) is not autocorrelated.

1. The short run or impact reaction of Y to a unit change in X is s*b(0).

2. The long run or total response is b(0). An estimate of b(0) can be obtained by dividing the estimate of s*b(0) by one minus the estimate of (1-s). |

Gujarati Book page 675| Quote: |

| The partial adjustment model resembles both the Koyck and adaptive expectation models in that it is autoregressive. But it has a much simpler disturbance term: the original disturbance term u(t) multiplied by a constant s. But bear in mind that although similar in appearance, the adaptive expectation and partial adjustment models are conceptually very different. The former is based on uncertainty (about the future course of prices, interest rates, etc.), whereas the latter is due to technical or institutional rigidities, inertia, cost of change, etc. However both of these models are theoretically much sounder than the Koyck model. |

++++++++++++++++

(b) Using the long run demand for money function:

ln(exp(M(t))) = b(1) + b(2)*ln(R(t)) + b(3)*ln(Y(t)) + u(t)

in which exp(M) is the desired demand for real cash balances, R is a long term interest rate and Y is real national income, together with the logarithmic partial adjustment hypothesis:

ln(M(t)) - ln(M(t-1)) = ad{ln(exp(M(t))) - ln(M(t-1))}

in which M is real cash balances,

derive the short run demand for money function.++++++++++++++++++
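One way to sketch the derivation asked for in (b) is to substitute the long run function into the adjustment hypothesis. The notation here is an assumption: M* stands for the desired balances (written exp(M) in the question) and δ for the adjustment coefficient (written "ad" in the question).

```latex
\begin{align*}
\ln M_t - \ln M_{t-1} &= \delta\,\bigl(\ln M_t^{*} - \ln M_{t-1}\bigr) \\
\ln M_t &= \delta \ln M_t^{*} + (1-\delta)\,\ln M_{t-1} \\
        &= \delta b_1 + \delta b_2 \ln R_t + \delta b_3 \ln Y_t
           + (1-\delta)\,\ln M_{t-1} + \delta u_t
\end{align*}
```

The last line is the short run function: each short run elasticity is δ times its long run counterpart, and the disturbance is the original one scaled by the constant δ, as noted in the quoted section 1.1.3.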

(c) The following demand for money function was estimated with annual data over the period 1960-1999 (40 observations):

ln(M(t)) = -3.21 - 0.45*ln(R(t)) + 0.65*ln(Y(t)) + 0.25*ln(M(t-1))

-----------(2.65)-----(0.07)----------(0.20)-----------(0.02)

(Standard errors)

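R^2 = 0.987------DW = 1.5

(i) Calculate and interpret Durbin's h statistic.

h =approx= (1-d/2)*sqrt(N/(1-N*var(lambda)))

where var(lambda) is the squared standard error of the coefficient on ln(M(t-1)): (0.02)^2 = .0004

= (1-.75)*sqrt(40/(1-40*(.0004)))

= 1.59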
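The arithmetic for part (c) can be sketched in a few lines of Python. The variable names are hypothetical. Two standard results are assumed: h is approximately standard normal under the null of no first-order autocorrelation (so 1.96 is the two-sided 5% cut-off), and in the partial adjustment model the coefficient on ln(M(t-1)) equals one minus the adjustment parameter.

```python
import math

n = 40
dw = 1.5
coef_lag, se_lag = 0.25, 0.02      # coefficient and standard error on ln(M(t-1))
coef_r, coef_y = -0.45, 0.65       # short-run interest and income elasticities

# Durbin's h: (1 - d/2) * sqrt(N / (1 - N * var(coefficient on lagged M)))
h = (1 - dw / 2) * math.sqrt(n / (1 - n * se_lag ** 2))

# Partial adjustment: coefficient on ln(M(t-1)) is (1 - adjustment)
adjustment = 1 - coef_lag
long_run_income = coef_y / adjustment
long_run_interest = coef_r / adjustment

print(f"h = {h:.2f}, reject no autocorrelation at 5%: {abs(h) > 1.96}")
print(f"adjustment parameter = {adjustment:.2f}")
print(f"long-run income elasticity = {long_run_income:.3f}")
print(f"long-run interest elasticity = {long_run_interest:.3f}")
```

On these numbers h is below 1.96, so the null of no first-order autocorrelation is not rejected at the 5% level. An adjustment parameter of 0.75 would mean that about three quarters of the gap between desired and actual balances is closed within a year.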

(ii) Derive an estimate of the adjustment parameter and interpret it.

(iii) What is the long-run income elasticity of the demand for money and what does it tell us?

(iv) What is the long-run interest elasticity of the demand for money?

Question #3|Sample Examination|Unit 3

(a) In the context of a simultaneous equation model, explain clearly the differences between:

(i) endogenous, exogenous and predetermined variables:Exercise 1.b| Quote: |

| Endogenous variables are those variables whose values are determined by the solution to the simultaneous equation system representing the economic model. The values of exogenous variables are not determined by the solution to the model, but are provided by information supplied from outside the model. Predetermined variables are exogenous variables (current or lagged values) plus any lagged endogenous variables. |

So the predetermined variables are the exogenous variables plus any lagged endogenous variables.

(ii) behavioural equations, equilibrium conditions and identities:Unit 3 Pages 3-4| Quote: |

| A model is a set of equations describing hypotheses about economics relations. Equations may be of three kinds: first, they may be definitions or identities, setting up identities between variables; Y=C+I+G, the second equation in the model (3.4), is of this type. Note that an identity is not an equation to be estimated by econometric procedures and it does not include a random disturbance term; it simply defines an equality. Secondly, equations may be behavioural, showing the assumptions made about the way in which economic agents, or groups of economic agents behave: in (3.3) and (3.4) the demand and supply functions and the consumption function are of this kind. These behavioural equation are the ones with parameters to be estimated by econometric methods from real world data. Finally, model equations may state equilibrium conditions; see, for example, the third equation of the market model (3.3) which states that for equilibrium in the marketplace the quantity demanded must equal the quantity supplied. |

(iii) the structural and reduced forms of the model:Exercise 1.c| Quote: |

| The structural form of the model represents a theory or hypothesis about the relationship in an economy or some part of an economy. The equations of the structural form may be behavioural equations, identities or equilibrium conditions, and the variables of the structural equations can be endogenously or exogenously determined. The reduced form of the model is the set of equations that express the endogenous variables of the model in terms of the predetermined variables. The equations in the final form are similar but, for each equation, only lagged values of the left-hand side endogenous variables are permitted on the right-hand side. |

+++++++++++++++++

(b) Consider the following market model:

Qd(t) = a(1) + a(2)*P(t) + a(3)*Y(t)

Qs(t) = b(1) + b(2)*P(t) + b(3)*P(t-1)

Qd(t) = Qs(t)

in which Qd(t), Qs(t) and P(t) are endogenous and Y(t) is exogenous.

(i) Find the reduced form of the model.***Section 1.4

****Answer 3*****

(ii) Find the final form of the model.***Section 1.5.1

(iii) Explain what determines the stability of the model.Section 1.5.3 and answer 5 & 6| Quote: |

...we know that the system will be stable if the coefficient on the lagged endogenous variable has an absolute value less than one.

...

If ... this expression will be negative .... then it will follow an oscillating path, tending to a stable equilibrium only if the absolute value of the numerator is less than the absolute value of the denominator. |
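For the market model in (b), setting Qd(t) = Qs(t) and solving for price gives P(t) = (b(1)-a(1))/(a(2)-b(2)) + (b(3)/(a(2)-b(2)))*P(t-1) - (a(3)/(a(2)-b(2)))*Y(t), so the stability condition quoted above becomes |b(3)| < |a(2)-b(2)|. The sketch below checks this numerically. The parameter values and function names are hypothetical, chosen only to give a negative coefficient on lagged price and hence an oscillating path.

```python
def reduced_form_price(a1, a2, a3, b1, b2, b3):
    """Coefficients of P(t) = pi0 + pi1*P(t-1) + pi2*Y(t)."""
    denom = a2 - b2
    return (b1 - a1) / denom, b3 / denom, -a3 / denom


def simulate_price(pi0, pi1, pi2, p_start, y_path):
    """Iterate the price equation forward over a path for Y."""
    prices = [p_start]
    for y in y_path:
        prices.append(pi0 + pi1 * prices[-1] + pi2 * y)
    return prices


# Hypothetical structural parameters, for illustration only
pi0, pi1, pi2 = reduced_form_price(a1=100, a2=-2.0, a3=0.5, b1=10, b2=1.0, b3=1.5)
stable = abs(pi1) < 1                       # coefficient on lagged price
path = simulate_price(pi0, pi1, pi2, p_start=20.0, y_path=[60.0] * 30)
equilibrium = (pi0 + pi2 * 60.0) / (1 - pi1)

print(f"pi1 = {pi1:.2f}, stable: {stable}")
print("first prices:", [round(p, 2) for p in path[:5]])
print(f"equilibrium price with Y fixed at 60: {equilibrium:.2f}")
```

Because pi1 is negative here, successive prices overshoot and undershoot the equilibrium while converging toward it.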

#4(a) Explain carefully the meaning and significance of saying that an equation is 'identified'.| Quote: |

19.2 The Identification Problem (Page 739)

By the identification problem we mean whether numerical estimates of the parameters of a structural equation can be obtained from the estimated reduced-form coefficients. If this can be done, we say that the particular equation is identified. |

To obtain unique estimates of the structural parameters, each equation must exclude enough of the system's variables to be identified. If it excludes more than is strictly needed, the equation is overidentified, and more than one value can be recovered for some of its parameters from the reduced form.

Answers to Exercise 1:| Quote: |

(a) The 'identification problem' refers to the question of whether numerical values for the parameters of the structural equations of a model can be determined from the estimated reduced form coefficients.

(b) (i) A structural equation is exactly identified if unique numerical values for its parameters can be obtained from the reduced form coefficients (rfc).

(ii) not identified if it is impossible to obtain numerical values for the structural parameters from the rfc.

(iii) overidentified if more than one set of numerical values can be calculated for the structural parameters from the rfc. |

+++++++++

(b) Explain carefully the order and rank conditions for identification.+++++++++++

Consider the following simultaneous equations system:

Y(1t) = a(1) + a(2)*Y(3t) + u(1t)

Y(2t) = b(1) + b(2)*X(1t) + b(3)*Y(1t) + b(4)*Y(3t) + u(2t)

Y(3t) = l(1) + l(2)*X(2t) + l(3)*X(3t) + l(4)*Y(2t) + u(3t)

in which the Y(i) are endogenous variables, the X(i) are exogenous variables and the u(i) are disturbances.

(c) Use the order and rank conditions to examine the identification of the equations.++++++++++
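The order condition for this system can be checked mechanically. The sketch below assumes the common textbook form of the condition: with K predetermined variables in the system, an equation containing m endogenous and k predetermined variables needs K - k >= m - 1. The function and dictionary names are hypothetical. The order condition is only necessary, so the rank condition still has to be checked by hand.

```python
EXOGENOUS = {"X1", "X2", "X3"}          # predetermined variables in the system (K = 3)


def order_condition(endogenous_in_eq, exogenous_in_eq):
    """Classify one equation by comparing K - k with m - 1."""
    excluded = len(EXOGENOUS) - len(exogenous_in_eq)   # K - k
    needed = len(endogenous_in_eq) - 1                 # m - 1
    if excluded < needed:
        return "not identified"
    return "exactly identified" if excluded == needed else "overidentified"


system = {
    "eq1": ({"Y1", "Y3"}, set()),
    "eq2": ({"Y2", "Y1", "Y3"}, {"X1"}),
    "eq3": ({"Y3", "Y2"}, {"X2", "X3"}),
}
results = {name: order_condition(*spec) for name, spec in system.items()}

# Same check with Y(3t) dropped from the second equation, i.e. b(4) = 0
eq2_restricted = order_condition({"Y2", "Y1"}, {"X1"})

for name, status in results.items():
    print(name, "->", status, "(order condition only)")
print("eq2 with b(4) = 0 ->", eq2_restricted, "(order condition only)")
```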


(d) What are the implications for identification if b(4) = 0?

#5. (a) What are the principal characteristics of a recursive model? How would you obtain estimates of the parameters?

There is a sequence of equations in which the first equation has no endogenous variables among its regressors, and each subsequent equation includes only the endogenous variables of the preceding equations among its regressors. Thus causality is unidirectional, with no bidirectional causality.

| Quote: |

1.2

A recursive model has the characteristic that the equations can be ordered in a specific way. The endogenous variable in the first equation is determined only by exogenous variables. The dependent variable in the second equation is determined by the endogenous variable in the first equation and exogenous variables-but not by any other endogenous variable. The dependent variable in the third equation is determined by the endogenous variables in the first two equations and exogenous variables-but no other endogenous variable enter as a regressor.

<<>>

There are two crucial requirements. First there is no feedback from a higher level endogenous variable to one lower down the causal chain. Secondly, the disturbances are assumed independent...

...

Furthermore, if none of the equations have lagged dependent variables, OLS estimators are unbiased. |

++++++++++++

(b) Explain carefully one method for estimating the parameters of an overidentified equation in a system of simultaneous equations.

1.4 Overidentified Equations and 2SLS: Unit 5 Page 6, 1.4 | Quote: |

When an endogenous variable appears as a regressor it is correlated with the disturbance term. The basic idea behind 2SLS is to replace the stochastic endogenous regressor with one that is non-stochastic and consequently independent of the disturbance term. The method is called two-stage least squares for the obvious reason that least squares estimation is applied in two stages. For the equation to be estimated:

Stage 1: Regress each endogenous regressor on all of the predetermined (exogenous and lagged endogenous) variables in the entire system, using OLS. That is, estimate the reduced form equations. Calculate the predicted values of the endogenous variables. This yields, for example, Y(t) = est(Y(t)) + est(u(t)) in which est(u(t)) are estimated residuals which are uncorrelated with est(Y(t)) (proof p 59 Gujarati).

Stage 2: The predicted values are used as proxies or instruments for the endogenous regressors in the original equations.

The method of 2SLS has been widely used in empirical work. It generates estimates which are biased but consistent and which are relatively robust in the presence of specification errors. It is desirable, however, that the R^2 for the estimated reduced form equations in stage 1 are not too low. ... For an equation that is exactly identified, 2SLS yields estimates identical to those obtained by ILS and so 2SLS is usually applied to all identified equations. |
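The two stages can be sketched in plain Python. Everything here is hypothetical: the data are made up, `ols` is a small helper that solves the normal equations by Gaussian elimination, and the structural disturbance is set to zero so that the mechanics can be checked exactly (the second stage should then recover the structural coefficients 1 and 2). With real data the second-stage standard errors would also need the correction mentioned in the notes on 2SLS.

```python
def ols(X, y):
    """OLS coefficients from the normal equations (X'X)b = X'y."""
    k = len(X[0])
    # Augmented matrix [X'X | X'y]
    A = [[sum(row[i] * row[j] for row in X) for j in range(k)]
         + [sum(row[i] * yi for row, yi in zip(X, y))] for i in range(k)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]


def predict(X, beta):
    """Fitted values X*beta."""
    return [sum(b * x for b, x in zip(beta, row)) for row in X]


# Hypothetical data: z1, z2 are predetermined, y2 is the endogenous regressor
z1 = [1, 2, 3, 4, 5, 6, 7, 8]
z2 = [2, 1, 4, 3, 6, 5, 8, 7]
v = [0.5, -0.3, 0.2, -0.4, 0.1, 0.3, -0.2, -0.2]
y2 = [1 + 0.5 * a + 0.8 * b + e for a, b, e in zip(z1, z2, v)]
y1 = [1 + 2 * val for val in y2]     # structural equation, disturbance set to zero

# Stage 1: regress the endogenous regressor on all predetermined variables
Z = [[1, a, b] for a, b in zip(z1, z2)]
y2_hat = predict(Z, ols(Z, y2))

# Stage 2: use the fitted values in place of y2 in the structural equation
X2 = [[1, f] for f in y2_hat]
intercept, slope = ols(X2, y1)

print(f"2SLS intercept = {intercept:.4f}, slope = {slope:.4f}")
```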

++++++++++

(c) What are the main features of the two-stage least squares (2SLS) estimator?

Gujarati Book page 773 | Quote: |

Note the following features of 2SLS.

1. It can be applied to an individual equation in the system without directly taking into account any other equation(s) in the system. Hence, for solving econometric models involving a large number of equations, 2SLS offers an economical method. For this reason the method has been used extensively in practice.

2. Unlike ILS, which provides multiple estimates of parameters in the overidentified equations, 2SLS provides only one estimate per parameter.

3. It is easy to apply because all one needs to know is the total number of exogenous or predetermined variables in the system without knowing any other variables in the system.

4. Although specifically designed to handle overidentified equations, the method can also be applied to exactly identified equations. But then ILS and 2SLS will give identical estimates.

5. If the R^2 values in the reduced-form regressions (that is, Stage 1 regressions) are very high, say, in excess of 0.8, the classical OLS estimates and the 2SLS estimates will be very close. But this result should not be surprising because if the R^2 value in the first stage is very high, it means that the estimated values of the endogenous variables are very close to their actual values, and hence the latter are less likely to be correlated with the stochastic disturbances in the original structural equations. If, however, the R^2 values in the first-stage regressions are very low, the 2SLS estimates will be practically meaningless because we shall be replacing the original Y's in the second-stage regression by the estimated est(Y)'s from the first-stage regressions, which will essentially represent the disturbances in the first-stage regressions. In other words, in this case, the est(Y)s will be a very poor proxies for the original Y's.

6. Notice that in reporting the ILS regression in (20.3.15) we did not state the standard errors of the estimated coefficients (for reasons explained in footnote 10). But we can do this for the 2SLS estimates because the structural coefficients are directly estimated from the second-stage (OLS) regressions. There is, however, a caution to be exercised. The estimated standard errors in the second-stage regressions need to be modified because, as can be seen from Eq. (20.4.60), the error term u(t)* is, in fact, the original error term u(2t) plus b(21)*est(u(t)). Hence the variance of u(t)* is not exactly equal to the variance of the original u(2t). However, modification required can be easily effected by the formula given in Appendix 20A, Section 20A.2.

7. In using the 2SLS, bear in mind the following remarks of Henry Theil:

| Quote: | The statistical justification of the 2SLS is of the large-sample type. When there are no lagged endogenous variables...the 2SLS coefficient estimators are consistent if the exogenous variables are constant in repeated samples and if the disturbance(s) ...are independently and identically distributed with zero means and finite variances...If these two conditions are satisfied, the sampling distribution of 2SLS coefficient estimators becomes approximately normal for large samples...

When the equation system contains lagged endogenous variables, the consistency and large-sample normality of the 2SLS coefficient estimators require an additional condition...that as the sample increases the mean square of the values taken by each lagged endogenous variable converges in probability to a positive limit...

If the [disturbances are] not independently distributed, lagged endogenous variables are not independent of the current operation of the equation system..., which means these variables are not really predetermined. If these variables are nevertheless treated as predetermined in the 2SLS procedure, the resulting estimators are not consistent. |

|

#6. (a) Explain what is meant by:(i) a stationary time series| Quote: |

The time series x(t) is weakly (covariance) stationary if the following three properties hold:

1. the mean is constant through time, E[x(t)] = m for all t

and

2. the variance is constant through time, var(x(t)) = E[(x(t) - m)^2] = sigma^2 for all t

and

3. the covariance depends only upon the number of periods between two values

cov(x(t), x(t-k)) = E[(x(t) - m)*(x(t-k) - m)] = lambda(k), k<>0 for all t.

If any of 1, 2 or 3 is not true then the time series, x(t), is nonstationary. |

(ii) a random walk with drift.| Quote: |

Consider the process:

x(t) = a(0) + x(t-1) + e(t)

{with a(0) being the drift part of the equation either being negative or positive drift and e(t) giving the randomness of the value added to last x.}

and the initial value of x at time t=0, x(0), is fixed. The current value of the series is the sum of a fixed increment, a(0), the previous value and a purely random element. The random walk with drift may be viewed as an autoregressive model with an intercept and a coefficient of one on the lagged variable. |
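The process above is easy to simulate, which also shows why differencing matters: the level wanders, but the first difference is just the drift plus the random element. This is a minimal sketch with hypothetical values (drift 0.1, standard normal shocks, an arbitrary seed) and a hypothetical function name.

```python
import random


def random_walk_with_drift(x0, drift, shocks):
    """x(t) = drift + x(t-1) + e(t), starting from the fixed value x(0)."""
    path = [x0]
    for e in shocks:
        path.append(drift + path[-1] + e)
    return path


random.seed(0)                                   # arbitrary seed, for reproducibility
shocks = [random.gauss(0, 1) for _ in range(200)]
x = random_walk_with_drift(x0=0.0, drift=0.1, shocks=shocks)

# First difference: delta x(t) = drift + e(t)
dx = [x[t] - x[t - 1] for t in range(1, len(x))]
mean_dx = sum(dx) / len(dx)

print(f"final level = {x[-1]:.2f}, mean first difference = {mean_dx:.3f}")
```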

++++++++++++

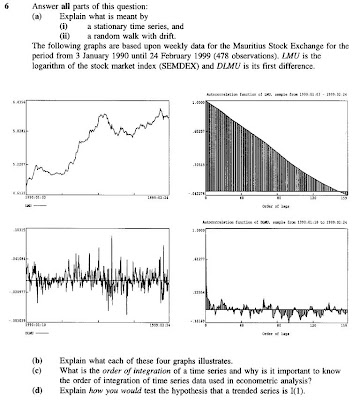

The following graphs are based upon weekly data for the Mauritius Stock Exchange for the period from 3 January 1990 until 24 February 1999 (478 observations). LMU is the logarithm of the stock market index (SEMDEX) and DLMU is its first difference.

(b) Explain what each of these four graphs illustrates.

Graph 1 (upper left): It appears to be a random walk with positive drift. It is not trend stationary: it shows prolonged rises and falls over time, whereas a trend-stationary series would show white noise around a visible trend. Figures 8 and 13 show this.

Graph 2 (upper right):We are looking at the order of lags to see how quickly autocorrelation drops to zero, and in this example it takes quite a few. Thus we can see that the original time series is not stationary. Figure #20 is most similar.

Graph 3 (lower left): This is the first difference of our time series, which looks like white noise, so the series is stationary after first differencing. Figure #15 shows this effect.

Graph 4 (lower right): This is the autocorrelation function of the first difference. It resembles Figure #18, which shows an AR(1) with a coefficient of .8.

+++++++++++++++++

(c) What is the order of integration of a time series and why is it important to know? | Quote: |

1.2.1 Integrated Series:

If x(t) is a nonstationary series and if delta(x(t)) is stationary, then x(t) is said to be integrated of order 1, which is denoted by I(1). Notice that both the random walk and the random walk with drift, discussed in Sections 1.1.3 and 1.1.4 respectively, are I(1) series.

| Quote: | Definition: Order of Integration

In general, if a series must be differenced a minimum of d times to generate a stationary series then it is said to be integrated of order d, denoted I(d).

|

For an economic time series, we usually only consider the possibilities of it being I(1) or I(2). If a series is I(1), it is also said to be Difference-Stationary (DS) or a Difference Stationary Process (DSP). A stationary series is integrated of order zero, I(0). The first difference of an I(0) series is also I(0). |

Gujarati Book page 804-805; 21.6 Integrated Stochastic Processes:| Quote: |

The random walk model is but a specific case of a more general class of stochastic processes known as integrated processes. Recall that the RWM without drift is nonstationary, but its first difference, as shown in {21.3.8}, is stationary. Therefore, we call the RWM without drift integrated of order 1, denoted as I(1). Similarly, if a time series has to be differenced twice (i.e., take the first difference of the first differences) to make it stationary, we call such a time series integrated of order 2. In general, if a (nonstationary) time series has to be differenced d times to make it stationary, that time series is said to be integrated of order d. A time series Y(t) integrated of order d is denoted Y(t) ~ I(d). If a time series Y(t) is stationary to begin with (i.e., it does not require any differencing), it is said to be integrated of order zero, denoted by Y(t) ~ I(0). Thus, we will use the terms "stationary time series" and "time series integrated of order zero" to mean the same thing.

Most economic time series are generally I(1); that is, they generally become stationary only after taking their first differences {and not more}. |

++++++++++++

(d) Explain how you would test the hypothesis that a trended series is I(1).

This is potentially a two-step process.

The first step is to run the Dickey-Fuller regressions. From the time series plot and the autocorrelation function graph we check whether there is a trend; if in doubt, we choose the DF regressions with a linear trend (the lower portion of the test output). Placing more emphasis on the SBC and HQC than on the AIC, we pick the line with the highest criterion values (the least negative numbers) and read off the DF/ADF(X) test statistic on that line. If the calculated value is greater than the critical value (the 95% critical value for the ADF statistic), the null hypothesis of a unit root is not rejected, so the series is nonstationary.

So we have not rejected the null hypothesis that the series is I(1), but this does not rule out that the series is I(2) or of higher order, although this possibility is very unlikely.

To check, we next obtain the first difference of the series, as in this example:

DLUS=LUSS-LUSS(-1)

We then run the Dickey-Fuller regressions again on the differenced series and look at the first part of the table since the model does not include an intercept. As before, if the calculated value of the DF statistic (on the lowest line in the chart) is less than the 95% critical value indicated below the chart, the null hypothesis that the series is I(2) is rejected. Having failed to reject a unit root in the levels and rejected one in the first differences, we conclude that the series is I(1).
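The two-step logic can be sketched with a bare-bones Dickey-Fuller regression in plain Python. This is a simplified illustration under stated assumptions: the regression has an intercept but no trend and no augmentation lags, the series is simulated (an arbitrary seed, standard normal shocks), and `df_t_stat` is a hypothetical helper. The resulting t-ratios must be compared with Dickey-Fuller critical values from the tables, not with ordinary t tables.

```python
import math
import random


def df_t_stat(x):
    """t-ratio on rho in: delta x(t) = a + rho*x(t-1) + e(t)."""
    lagged = x[:-1]
    delta = [x[t] - x[t - 1] for t in range(1, len(x))]
    n = len(delta)
    mean_l, mean_d = sum(lagged) / n, sum(delta) / n
    sxx = sum((l - mean_l) ** 2 for l in lagged)
    sxy = sum((l - mean_l) * (d - mean_d) for l, d in zip(lagged, delta))
    rho = sxy / sxx
    a = mean_d - rho * mean_l
    rss = sum((d - a - rho * l) ** 2 for l, d in zip(lagged, delta))
    se_rho = math.sqrt(rss / (n - 2) / sxx)
    return rho / se_rho


random.seed(1)                                   # arbitrary seed
shocks = [random.gauss(0, 1) for _ in range(300)]
level = [0.0]
for shock in shocks:
    level.append(level[-1] + shock)              # simulated I(1) series
diff = [level[t] - level[t - 1] for t in range(1, len(level))]

t_level = df_t_stat(level)    # step 1: test the levels
t_diff = df_t_stat(diff)      # step 2: test the first differences

print(f"DF t-ratio, levels: {t_level:.2f}")
print(f"DF t-ratio, first differences: {t_diff:.2f}")
```

For a series that is I(1) by construction, the statistic for the first differences should be far more negative than the one for the levels, which is the pattern the two-step procedure looks for.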

**********Insert the hypothesis tests from study book Pages 24-27???************

#7. (a) What is meant by spurious regression?| Quote: |

1.1 Spurious Regression

Regression analysis may suggest a relationship exists between two or more variables when no causal relationship exists. This is called a nonsense or spurious regression. A classical example was provided by the statistician Yule in 1926. Using annual data for the period 1866-1911, he found a positive relationship between the death rate and the proportion of marriages in the Church of England. This implies that closing the church would result in immortality-clearly nonsense! |

But it is quite possible that a third factor is influencing both deaths and marriages. It would also have been interesting to see whether a Granger causality test could find the direction, if any, of the link. If higher deaths lead to more marriages, that could simply reflect people facing their own mortality.

From book pages 806-807:

| Quote: |

| Yule showed that (spurious) correlation could persist in nonstationary time series even if the sample is very large. That there is something wrong in the preceding regression is suggested by the extremely low Durbin-Watson d value, which suggests very strong first-order autocorrelation. According to Granger and Newbold, an R^2> d is a good rule of thumb to suspect that the estimated regression is spurious,... |

+++++++++++++

(b) What is the relationship between spurious regression and cointegration?

Section 1.2

To check whether a regression between two I(1) series (or any two series integrated of the same order) reflects a genuine relationship, we test whether they are "cointegrated"; if they are not, we assume the relationship is spurious (nonsense). Testing for cointegration is how we guard against spurious regressions.

| Quote: |

| More formally, if x(t) and y(t) are both I(1) {or I(x)} and there exists a linear combination y(t) - (la(1) + la(2)*x(t)) which is I(0) then x and y are cointegrated. |

| Quote: |

| However, it has been shown that if x and y are I(1) and cointegrated, then the OLS estimator of the slope coefficient is superconsistent. What do we mean by this? With I(1) series and cointegration, the sampling distributions of the OLS estimators collapse to their true values at a faster rate than is the case with I(0) series and where all of the classical assumptions are valid. That is, the OLS estimators of the lambdas converge in probability to their true values faster in the nonstationary case than in the stationary case! OLS estimators are consistent and very asymptotically efficient. |

+++++++++++++

(c) Explain a test of cointegration based on OLS residuals.

In the error correction model, H(0): b(3) = 0 means there is no cointegration; this is tested with the ECM t statistic, where b(3) is the coefficient on the lagged difference in levels (e.g. y(t-1) - x(t-1)).

| Quote: |

In general, a linear combination of two I(1) series is I(1). Therefore, if the series x and y are not cointegrated, the residuals, e(t), will be I(1). If x and y are cointegrated then the e(t) will be stationary and we would expect them to behave like an I(0) process. Therefore, we have:

H(0): x and y are not cointegrated, the residuals are I(1); against

H(1): x and y are cointegrated, the residuals are I(0). |

The two tests that answer this question are the Cointegrating Regression Dickey-Fuller (CRDF) Test (Section 1.3.1.1) and the Cointegrating Regression Augmented Dickey-Fuller (CRADF) Test (Section 1.3.1.2).
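A residual-based test of this kind can be sketched in two steps: estimate the cointegrating regression by OLS, then run a Dickey-Fuller regression on its residuals. The code below is a simplified illustration with no augmentation lags. The data are simulated so that y is cointegrated with x by construction, and the function names and seed are hypothetical. Critical values for residual-based tests are not the standard Dickey-Fuller ones, so the statistic should be compared with the tables given for the CRDF test.

```python
import math
import random


def ols_simple(x, y):
    """Intercept and slope from regressing y on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sxx
    return my - slope * mx, slope


def crdf_t_stat(x, y):
    """Step 1: cointegrating regression. Step 2: DF regression on the residuals."""
    intercept, slope = ols_simple(x, y)
    e = [b - intercept - slope * a for a, b in zip(x, y)]
    lagged = e[:-1]
    delta = [e[t] - e[t - 1] for t in range(1, len(e))]
    # delta e(t) = rho*e(t-1) + v(t); no intercept, since OLS residuals have mean zero
    sxx = sum(l * l for l in lagged)
    rho = sum(l * d for l, d in zip(lagged, delta)) / sxx
    rss = sum((d - rho * l) ** 2 for l, d in zip(lagged, delta))
    return rho / math.sqrt(rss / (len(delta) - 1) / sxx)


random.seed(2)                                           # arbitrary seed
x = [0.0]
for _ in range(299):
    x.append(x[-1] + random.gauss(0, 1))                 # I(1) regressor
y = [1.0 + 2.0 * xi + random.gauss(0, 1) for xi in x]    # cointegrated by construction

t_crdf = crdf_t_stat(x, y)
print(f"CRDF t-ratio on residuals: {t_crdf:.2f}")
```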

++++++++++++

(d) Explain the nature of first-order error correction model (ECM). Section 1.3.2 *******************

#8. (a) Explain carefully how single equation econometric models can be used to generate forecasts.

It must first be decided whether the model is static or dynamic (Sections 1.2.2 and 1.2.3).

We need a forecast of the exogenous variable, which we can derive from judgmental information or from an ARIMA model, but whatever the case..."forecasts from an econometric model are always conditional forecasts -- conditional on the assumed values of fore(X(T+1))."

Without an error term as in:

for(Y(T+1)) = est(a) + est(b)*for(X(T+1))

but if we want to add a "judgmental adjustment", which can be thought of as a forecast of the error term in T+1, we write:

for(Y(T+1)) = est(a) + est(b)*for(X(T+1)) + for(e(T+1))

| Quote: |

There are several reasons for adding this judgmental adjustment:

* The pure forecast may not look plausible. Adding or subtracting a bit may make it look more plausible.

* The forecaster may have information about things that are likely to happen which have not been included in the model, for example strikes, or changes in government policy. {I would think one time events or structural changes that no model could predict all possible outcomes.}

*The forecaster may believe that recent errors are going to persist and so set for(e(T+1)) equal to the value of the error in the last period or to an average of recent errors. {Judgment as to whether the bias will continue or not.} |

Forecasting with a Single Equation Dynamic Econometric Model:| Quote: |

The forecast for Y(T+1) is calculated in exactly the same way as with the static model

for(Y(T+1)) = est(a) + est(b)*for(X(T+1)) + est(lam)*Y(T)

but when we forecast T+2 and subsequent periods, we use the previous period forecast as we did in the AR model:

for(Y(T+2)) = est(a) + est(b)*for(X(T+2)) + est(lam)*for(Y(T+1))

This is known as a dynamic forecast because it uses the forecasts from the previous period on the right hand side. |
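The recursion in a dynamic forecast is short enough to write out. In this sketch the coefficient estimates, the last observed Y and the assumed future X values are all hypothetical, as is the function name; the point is only that each forecast of Y is fed into the next period's calculation.

```python
def dynamic_forecasts(a_hat, b_hat, lam_hat, y_last, x_future):
    """Forecast Y(T+1), Y(T+2), ... feeding each forecast into the next period."""
    forecasts = []
    y_prev = y_last                       # Y(T), the last observed value
    for x in x_future:                    # assumed (forecast) values of X
        y_prev = a_hat + b_hat * x + lam_hat * y_prev
        forecasts.append(y_prev)
    return forecasts


# Hypothetical estimates and inputs, for illustration only
path = dynamic_forecasts(a_hat=2.0, b_hat=0.5, lam_hat=0.4,
                         y_last=10.0, x_future=[8.0, 9.0])
print([round(v, 2) for v in path])
```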

++++++++++++

(b) The following ARIMA(1,1,0) model has been estimated to period T.

delta(Y(t)) = m + est(p)*delta(Y(t-1)) + est(u(t))

How can this model be used to create forecasts?

Exercise 2.

To calculate the next period T+1 we would use the factors from our regression and calculate the following:

fore(delta(Y(T+1))) = m + est(p)*delta(Y(T))

This is possible because delta(Y(T)) = Y(T) - Y(T-1) is known from the last two observations in our sample; since we did not include an error term (judgmental term), this is our simple estimate.

In terms of the level (the value of fore(Y(T+1))) we use the following:

fore(Y(T+1)) = Y(T) + fore(delta(Y(T+1)))

The last term was calculated above.

These values can then be used to forecast T+2.
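The same steps can be chained for as many periods as needed: forecast the change, add it to the latest level, and repeat using the forecast change in place of the observed one. A minimal sketch, with hypothetical values for m, est(p) and the last two observations, and a hypothetical function name:

```python
def arima_110_forecasts(m, p_hat, y_prev, y_last, steps):
    """Level forecasts from delta Y(t) = m + p*delta Y(t-1)."""
    delta = y_last - y_prev               # delta Y(T), from the last two observations
    level = y_last
    forecasts = []
    for _ in range(steps):
        delta = m + p_hat * delta         # forecast of the next change
        level = level + delta             # add it to the latest level
        forecasts.append(level)
    return forecasts


# Hypothetical inputs: Y(T-1) = 100, Y(T) = 104
path = arima_110_forecasts(m=1.0, p_hat=0.5, y_prev=100.0, y_last=104.0, steps=2)
print(path)
```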

+++++++++++++++

(c) Explain any three measures of forecast accuracy and discuss their relative merits.

Section 1.4 in Unit 8.
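These notes do not say which three measures Section 1.4 covers, so the sketch below is an assumption: it computes three commonly used ones, the root mean squared error (RMSE), the mean absolute error (MAE) and the mean absolute percentage error (MAPE), on made-up numbers. RMSE penalises large errors more heavily than MAE, while MAPE is unit-free but breaks down when an actual value is zero.

```python
import math


def rmse(actual, forecast):
    """Root mean squared error."""
    return math.sqrt(sum((f - a) ** 2 for a, f in zip(actual, forecast)) / len(actual))


def mae(actual, forecast):
    """Mean absolute error."""
    return sum(abs(f - a) for a, f in zip(actual, forecast)) / len(actual)


def mape(actual, forecast):
    """Mean absolute percentage error (undefined if any actual value is zero)."""
    return 100 * sum(abs((f - a) / a) for a, f in zip(actual, forecast)) / len(actual)


# Hypothetical actual values and forecasts
actual = [100.0, 110.0, 120.0]
forecast = [98.0, 113.0, 120.0]

print(f"RMSE = {rmse(actual, forecast):.3f}")
print(f"MAE  = {mae(actual, forecast):.3f}")
print(f"MAPE = {mape(actual, forecast):.3f}%")
```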

Printout...
